Today’s smart new television sets are trying to win your business with amazingly brilliant colors and superfine resolutions that increasingly go beyond the ability of the human eye to detect. Yes, they are that good and only getting better all the time.

So What Is Resolution?

Resolution is the amount of detail you can see on the screen. Each individual spot on a TV screen, called a pixel, has a set of coordinates attached to it. Each pixel needs both a horizontal address and a vertical one so that the requisite burst of light lands on the screen in that exact spot.

TV sets today are classified by the number of pixels on each horizontal line running from side to side across the screen. A screen that is rated at, for example, 4000 pixels has 4000 individual points on each horizontal line.

In turn, each of those 4000 horizontal positions sits on a vertical line running from top to bottom. The vertical resolution is also counted in pixels, and is typically 9/16ths of the horizontal count. The difference exists because modern TV sets are widescreen units with a 16:9 ratio of horizontal width to vertical height.

Multiplying the number of pixels in a horizontal line by the number of pixels in a vertical line gives the total number of pixels on the screen of any particular television.
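As a rough illustration of that arithmetic, here is a minimal Python sketch that derives the vertical count for a 16:9 screen and multiplies it by the horizontal count. The function names are made up for this example, and the 4000-pixel figure is simply the example used above.

```python
def vertical_pixels(horizontal: int) -> int:
    """Vertical pixel count for a 16:9 screen with the given horizontal count."""
    # 9/16ths of the horizontal count, as described in the text.
    return horizontal * 9 // 16


def total_pixels(horizontal: int) -> int:
    """Total pixels on the screen: horizontal count times vertical count."""
    return horizontal * vertical_pixels(horizontal)


if __name__ == "__main__":
    width = 4000  # the example figure used in the text
    print(f"{width} x {vertical_pixels(width)} = {total_pixels(width):,} pixels")
```

For the 4000-pixel example, this works out to 2250 pixels vertically and 9,000,000 pixels in total.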

HD or not HD? That Is The Resolution

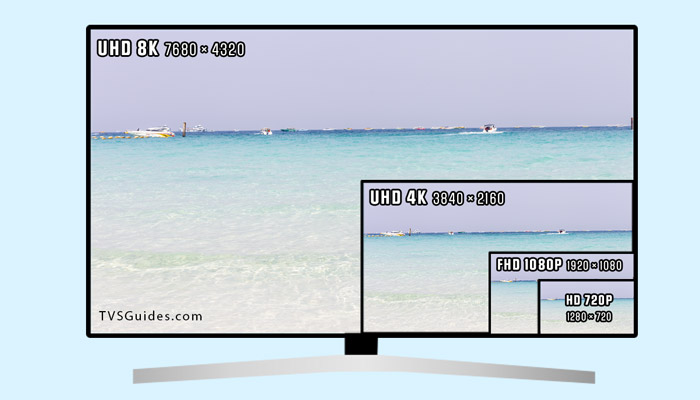

Since High Definition televisions came onto the market, the number of pixels has been multiplying with every new generation of sets. The original HD standard had 720 pixels on the VERTICAL axis (aka 720p) and a horizontal count of 1280 pixels. At 1280 x 720, these sets had a total pixel count of slightly under a million pixels.

The next upgrade was to what became known as Full HD, which bumped the VERTICAL count up to 1080 (1080p) and the horizontal count to 1920. This created an image with just over two million pixels.

As screens continued to get larger, the industry abruptly switched to counting pixels on the HORIZONTAL axis rather than the vertical one. This may have been a marketing ploy: the horizontal count is much larger than the vertical one, so the screens might appear larger and more detailed than they truly were.

After Full HD, the next move was up to the so-called Ultra High Definition, also known as UHD or 4K sets. There was also a bit of a speed bump in that both computer monitors and movie projectors moved into several variants of 2K, but none of these ever became a television standard. UHD, which is the current new TV standard, is 3840 x 2160 or somewhat over 8 million pixels.

At the present time, super pricey 8K UHD sets are being rolled out. These offer a resolution of 7680 x 4320 or about 33 million pixels. As can be seen, the “K” rating refers to the approximate number of pixels, in thousands, on each horizontal line and not to the total pixel count.
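The totals quoted for each generation can be checked with a few lines of Python. This is only a sketch: the dictionary name and labels are chosen for this example, while the width and height figures are the ones given in the text.

```python
# Width x height in pixels for each generation mentioned in the article.
RESOLUTIONS = {
    "HD (720p)": (1280, 720),
    "Full HD (1080p)": (1920, 1080),
    "UHD (4K)": (3840, 2160),
    "8K UHD": (7680, 4320),
}


def pixel_count(width: int, height: int) -> int:
    """Total number of pixels on a screen of the given dimensions."""
    return width * height


if __name__ == "__main__":
    for name, (width, height) in RESOLUTIONS.items():
        print(f"{name}: {width} x {height} = {pixel_count(width, height):,} pixels")
```

The exact totals are 921,600 for 720p, 2,073,600 for Full HD, 8,294,400 for UHD and 33,177,600 for 8K, which matches the rounded figures above. Each step from Full HD to 4K to 8K doubles both dimensions and therefore quadruples the pixel count.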

More Is Not Necessarily Better

The important thing to recognize about the explosion in pixel counts is that this is not so much a question of more pixels equaling better picture quality but rather of more pixels being needed to support bigger screen sizes. As each new generation of screens gets larger, a fixed number of pixels gets stretched out a little more each time the screen size rises.

This is why there is a continuing rise in the total number of pixels. Putting 2 million pixels on a 32-inch screen leaves them densely packed. Put the same 2 million pixels on a 60-inch screen and they are much more loosely arranged. Putting 8 million pixels on a 55-inch screen also packs them in densely.

Stretch that screen out to 60 or 65 inches and the resolution is already starting to degrade slightly, albeit not so much that the naked eye can really tell the difference. By the time you get to an 80- or 100-inch screen, a still-higher resolution will be needed just to maintain the same picture quality. The 33 million pixels of an 8K set are thus badly needed for the huge new monster televisions.
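One way to put numbers on how tightly pixels are packed is pixels per inch (PPI), measured along the screen diagonal. The sketch below is an illustration rather than an industry rating method: the function name is invented for this example, and it assumes the advertised screen size is the diagonal measurement in inches.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density along the diagonal of a screen.

    Assumes diagonal_in is the advertised diagonal screen size in inches.
    """
    # Length of the diagonal in pixels, by the Pythagorean theorem.
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / diagonal_in


if __name__ == "__main__":
    examples = [
        ("Full HD at 32 inches", 1920, 1080, 32),
        ("Full HD at 60 inches", 1920, 1080, 60),
        ("4K at 55 inches", 3840, 2160, 55),
        ("4K at 100 inches", 3840, 2160, 100),
        ("8K at 100 inches", 7680, 4320, 100),
    ]
    for label, width, height, size in examples:
        print(f"{label}: {pixels_per_inch(width, height, size):.1f} PPI")
```

By this measure, Full HD drops from roughly 69 PPI on a 32-inch screen to roughly 37 PPI on a 60-inch one, and a 100-inch screen needs 8K to reach a density comparable to a 55-inch 4K set.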

Output Equals Input

Another important factor to consider is that many television formats, both broadcast and cable, are not especially up-to-date. Even with the highest quality set, receiving a lower-resolution image from your provider is not going to allow your set to show its virtues quite as well as might be imagined.

The very best viewing has to start with the best screen, of course. Yet it also depends on the best possible input. Until the US upgrades to the next-generation ATSC 3.0 broadcast standard, no matter what your TV is rated at, it is going to receive nothing better than a 2K image and quite possibly something much less sharp, since different broadcast and cable networks use formats starting at 720p for much of their programming. This is all technically “HD”, but it is a very marginal low-end HD signal that has been largely superseded by higher quality formats outside of broadcast or cable reception.
